The research team at Google Brain just learned how to add realistic detail to low-resolution images using neural networks. It definitely doesn’t work as well as the image enhancing technology you’ll see featured on an episode of CSI. But it’s still intriguing and somewhat creepy to see Google’s AI take a stab at turning pixelated blobs into recognizable faces.

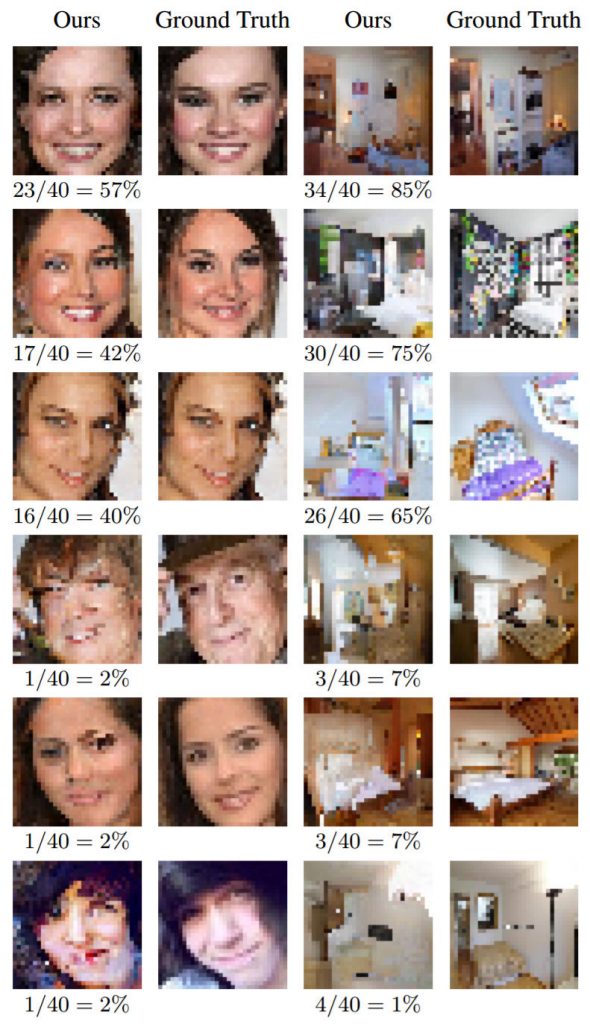

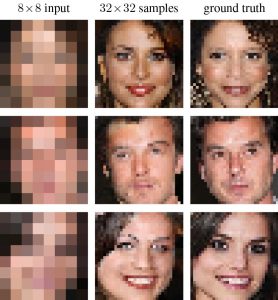

The Google Brain team fed the system 8×8 images of cropped celebrity portraits and bedrooms which it converted into detailed 32×32 pictures. Of course, there’s no way to truly reconstruct a picture that’s simply missing data. What the system did instead was decide on what the person or room could plausibly look like based on prior pictures and what it knows about things like the shape of the nose and the color of lips. In technical parlance, this is called “hallucinating”.

“When some details do not exist in the source image, the challenge lies not only in ‘deblurring’ an image, but also in generating new image details that appear plausible to a human observer,” writes the Google Brain researchers. “Generating realistic high resolution images is not possible unless the model draws sharp edges and makes hard decisions about the type of textures, shapes, and patterns present at different parts of an image.”

Google took a two-part approach to come up with these photogenic snaps. A conditioning neural network compared the low-res image against a high-res images scaled down to the same 8×8 size to see if any elements in the high-res image might be in there. Then a prior network used PixelCNN to create realistic details. It refers to a class of images – like celebrities and bedrooms – and adds details to the upscaled image based on what it’s seen before. The final output is a mashup of the two neural networks.

In real-world tests, human observers were fooled 10 percent of the time into thinking the Google Brain celebrity portrait was really shot from a camera (50 percent would have meant the AI perfectly confused them). The bedroom versions fared better with 28 percent of human observers being fooled. The scores are not too bad given these images are not real life.

The technology is still in its beginning stages but the potential applications are enticing, especially in the field of law enforcement. Just like an artist will talk to an eyewitness to create a composite sketch, we could one day see this technology create possible facial composites using fuzzy surveillance footage. And for the everyday photographer, maybe we could see Google partner with Adobe to release one hell of a sharpening tool in Photoshop.